Apple has confirmed that it will release several accessibility features with the iOS 17 update, and one of the most notable is Personal Voice. As the name suggests, iPhone users will be able to create a voice that sounds like their own in a relatively short amount of time.

The story began when the tech giant unveiled a batch of new accessibility features. While every addition in this category is useful, the Personal Voice feature in iOS 17 has particularly caught the attention of Apple fans.

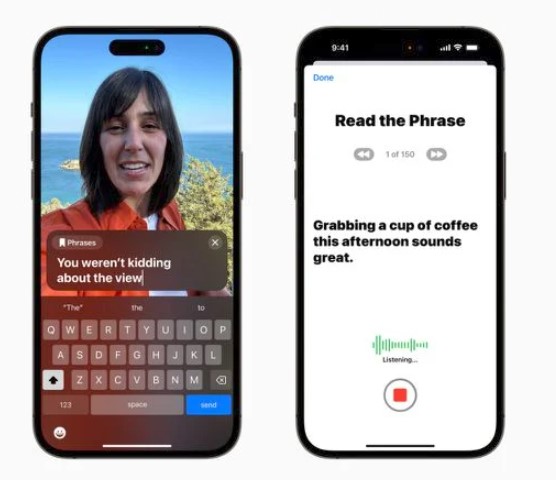

The feature lets you create a personal voice by reading a randomized set of text prompts aloud for about 15 minutes. It relies on on-device machine learning, so the audio is recorded and processed on the device itself, which helps keep users' data private and secure.

That's not all. Another feature, Live Speech, integrates with Personal Voice, so users can type what they want to say and have it spoken aloud in their own voice during FaceTime calls with loved ones, or even during in-person conversations.

Apple has designed this feature mainly for individuals who have difficulty speaking or are at risk of losing the ability to speak, particularly people living with ALS (amyotrophic lateral sclerosis), a disease that can progressively affect a person's ability to speak.

Users with an iPhone, iPad, or Mac with Apple silicon can create a personal voice by reading along with a set of on-screen text prompts. Apple said the feature will be available only in English at launch.

However, support for more languages may be added in the future. In the meantime, you can explore more of the features in this accessibility lineup through the link below.

Read More: Apple teases new iOS 17 Accessibility features before the official release